|

| Simple programs are simple. |

Before moving on, let's set up some context. First, we are not talking about concurrency here, but about parallelism. Think about the cases when the CPU is maxed out at 100%, and not about the cases when a thread sits blocked on a file read. While a lot of hip new languages target the concurrency problem (mostly thinking of Go and Rust), the parallelism one has been largely neglected. In any case, we are also not talking about language-level solutions. Why? Because legacy, well-tested and debugged code will likely keep existing; not to mention that writing in a language that exposes parallelism is non-trivial (or we'd all be monadic Haskell creatures). And finally, we are talking about a reasonably small number of cores (think 4-64) that you can fit on a single chip, not thousands across different machines in a supercomputer or a datacenter.

That being said, there has been some progress in extracting parallelism from sequential programs. Such techniques work mostly for simple programs, like the one on the right -- normally having huge loops that do a lot of computation, with little control flow. But real-world code is not nice and simple (or, at least, the code that is has already been ported to GPUs, or is easy to tune by hand). We want to be able to automatically parallelize programs that look more like this:

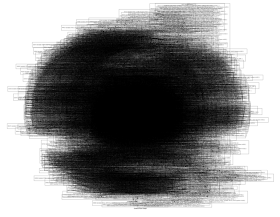

|

| A real control-flow graph from bzip2. At ~3K lines of code, it is on the small end of what we want to target. ICC- and MSVC-auto-parallelization get no speedup on it. |

|

| "HELIX, HELIX, HELIX!" chanted the crowds... |

Enter the HELIX project. HELIX is a parallelizing compiler that targets the goals I outlined above. It starts from any source language that can be compiled to CIL (in theory; in practice, we have tested mostly C/C++ and some C#), does its parallelization magic, and hands things off to LLVM for backend code generation. It's all automatically magical -- you just type in the equivalent of 'make' and get parallel code a few hours later.

On the high level, HELIX uses a single transformation to execute sequential code in parallel -- it ships out iterations of loops to different cores. Simple as that, one thread, one core, one loop iteration. With loops where each iteration is independent, it's job done at this point. But in the majority of cases, future iterations do depend on values from previous ones, and one needs to synchronize to observe these dependences. We call such synchronization regions sequential segments -- you can think of them as critical sections, though with some extra constraints.

|

| HELIX execution in a nutshell. Two independent sequantial segments in parallel. |

HELIX is based on one simple rule for sequential segments: keep them small and fast. The usefulness of this shows up in the example to the right -- even though technically all code in that loop depends on values from previous iterations, there are two distinct dependences that the compiler can infer, and the resulting two small(er) sequential segments can execute in parallel with one another. An analogy with traditional parallel programming would be breaking up locks (anyone remember the big kernel lock? I hope not). Except done in a scalable manner by a compiler.

Ok, I wasn't completely honest about that scalable part. Proving that sequential segments can be broken down requires fairly accurate data dependence analysis, and that is a know very hard problem in compiler theory. Especially for big, hairy and scary codes like the ones we are interested in. HELIX obviously has state-of-the-art-and-better dependence analysis. But it also uses an additional cop out: parallelizing small loops.

As it turns out, these small loops cover large portions of big and hairy programs, and one can get good speedup parallelizing them. What's even better, performing dependence analysis on them is significantly easier simply because there is less code to analyze (on our test set, the HELIX dependence analysis manages to get 80+% accuracy on small loops, and less that 20% on bigger ones).

Of course, there's a gotcha with small loops: they tend to be short (I know, shocking). Or, to be more precise, each single iteration is short. Really short. In our test suite, 50% of loops have an average iteration length of less than 25 clock cycles. Which brings us to the main problem of HELIX-style parallelization -- core-to-core communication latency. Compared to these 25 cycles, the fastest platform we could get our hands on (an Intel IvyBridge) takes 75 cycles to send a single piece of data from one core to the next. There a lot of tricks to try and escape from these 75 or more cycles (such as recomputing as much as possible on each core), but each loop needs to send at least some data more or less once per iteration. If sending that data requires more time than the loop body itself, things look bleak (this is why just HELIX on normal x86 hardware maxes out at around 2x speedup -- the HCCv2 bars on the graph below).

This is where specialized hardware comes into play. In a paper that we presented earlier this week at ISCA, we showed a small addition to general-purpose processors that significantly helps parallelizing compilers that use the HELIX mantra. We called it ring cache. It cuts down the latency of sending data from a core to its neighbor from these 75 cycles to effectively 0 (for the record, I am not trying to bash general-purpose hardware; there's a reason for these 75 cycles: mostly coherence -- the illusion that one is programming a single-core machine -- and optimizing the cache hierarchy for bandwith, not for latency ). Ring cache is a small piece of cache and communication fabric that sits between a core and its L1 data cache. It only triggers during sequential segments and immediately sends shared data to its adjacent core, even before it has asked for it. This way, when the core actually needs shared data, it can get it from its local ring cache, effectively immediately. There's a lot more to its design (and a lot of detailed simulation to show that it's actually feasible, not to mention 32x smaller than the L1 cache), but go read the paper, if interested. The important part is, adding a ring cache to a normal multicore makes all the difference in being able to parallelize complex programs with a HELIX-like compiler. Here are some results for a simulated 16-core Atom:

|

| Look at all these speedups! On not-completely-trivial (non-numerical) programs! |

During the time this paper spent in peer review (and that was long, believe me), we have received many doubts about the results above. And understandably so: going after automatic parallelization has long been considered chasing unicorns in compiler-land. We are doing our best to release as much from our infrastructure as possible/practical, so that others can reproduce our results. The simulator we used for our special hardware (my baby, XIOSim), is already online. So is ILDJIT, the compiler framework where all transformations are implemented. Setting up the whole flow can be a little tricky, so we are planning to put up our parallelized binaries, optimized for different real and simulated platforms, very soon too.

Finally, even though I am the person writing this post, I am by far not the main HELIX mastermind. This honor (cough, sheer insanity, cough) falls to Simone Campanoni, who is our main compiler guru. Kevin Brownell and me designed the hardware that I sketched out above, and our friends in the UK have spent a lot of time on dependence analysis and other compiler goodness.

What do you think, blogosphere? Do you need some serial code parallelized? Because we might have just come with a solution...

Sands Casino | Shootercasino.com

ReplyDeleteIt is our belief that gambling on real money in the 샌즈카지노 casino industry should not be a part of the entertainment or sports SEGA Mega Drive Classics - $7.25 / $10.99 다파벳 SEGA 온카지노 Mega Drive Classics - $7.25 / $10.99